Conjecture internal survey: AGI timelines and probability of human extinction from advanced AI

Conjecture

May 22, 2023

This survey was conducted and analysed by Maris Sala.

We put together a survey to study Conjecture employees’ opinions on AGI timelines and the probability of human extinction from AI. The questions were based on previous public surveys and prediction markets, so that the results are comparable with people’s opinions outside of Conjecture.

The survey was conducted in April 2023. There were 23 unique responses from people across teams.

Section 1. Probability of human extinction from AI

Setup and limitations

The specific questions the survey asked were:

What probability do you put on human inability to control future advanced A.I. systems causing human extinction or similarly permanent and severe disempowerment of the human species?

What probability do you put on A.I. systems causing human extinction or similarly permanent and severe disempowerment of the human species in general (not just because of inability to control, but also stuff like people intentionally using AI systems in harmful ways)?

The difference between the two questions is that the first focuses on risk from misalignment, whereas the second captures risk from misalignment and misuse.

The main caveats of these questions are the following:

The questions were not explicitly time-bound. I'd expect people’s estimates to differ between the risk of extinction this century, in the next 1000 years, and at any time in the future. The longer the timeframe we consider, the higher the values would be. I suspect employees were considering extinction risk roughly within this century when answering.

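The first question covers a subset of the scenarios in the second question, so a respondent’s answer to the first should not exceed their answer to the second. One employee gave a higher probability for the first question than the second; this was probably a misinterpretation.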

The questions factor in interventions, such as how Conjecture’s and others’ safety work will impact extinction risk. We expect the numbers would be higher if respondents had factored out their own or others’ safety work.
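The subset relation in the second caveat suggests a simple consistency check: a respondent's answer to the first question should not exceed their answer to the second. A minimal sketch of such a check follows. The function name, the data layout, and the example values are assumptions made for illustration and are not the survey's data.

```python
from typing import Optional

# Hypothetical responses as (misalignment %, misalignment-or-misuse %).
# None marks a skipped question. Illustrative values only, NOT survey data.
Response = tuple[Optional[float], Optional[float]]


def inconsistent_respondents(responses: list[Response]) -> list[int]:
    """Return indices of respondents whose first answer exceeds their second."""
    flagged = []
    for index, (misalignment, overall) in enumerate(responses):
        if misalignment is None or overall is None:
            continue  # cannot compare when one question was skipped
        if misalignment > overall:
            flagged.append(index)
    return flagged


example = [(70.0, 80.0), (60.0, 50.0), (None, 90.0), (30.0, 30.0)]
print(inconsistent_respondents(example))  # [1]
```

Equal answers are treated as consistent, since a respondent may believe misuse adds no risk beyond misalignment.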

Responses

Out of the 23 respondents, one rejected the premise, and two people did not respond to one of the two questions but answered the other one. The main issue respondents raised was answering without a time constraint.

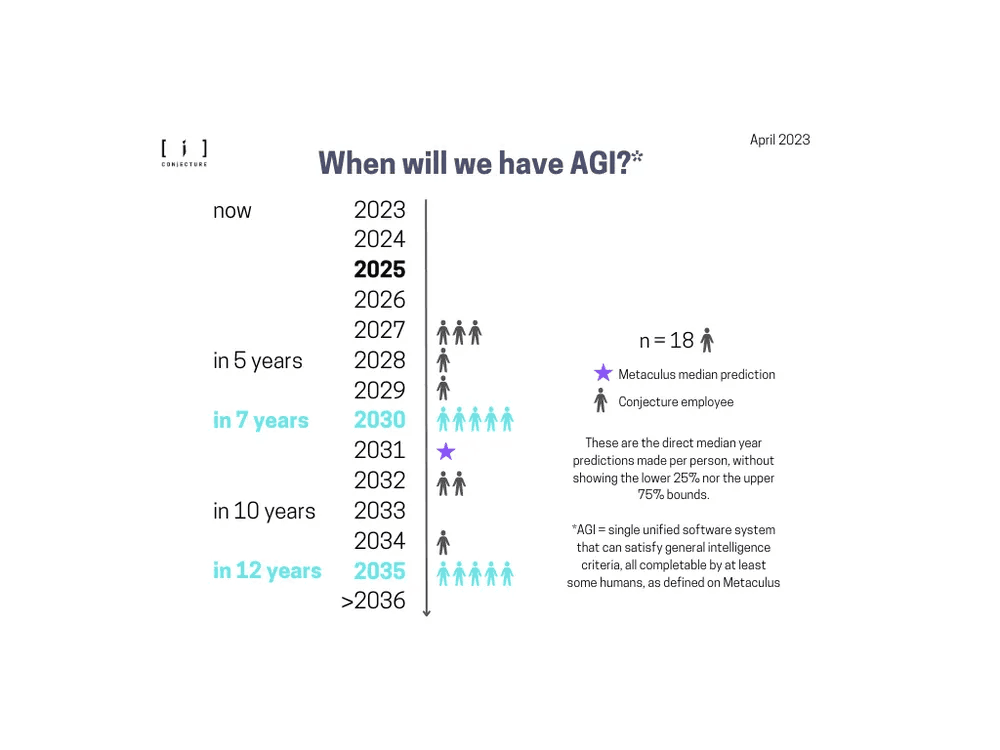

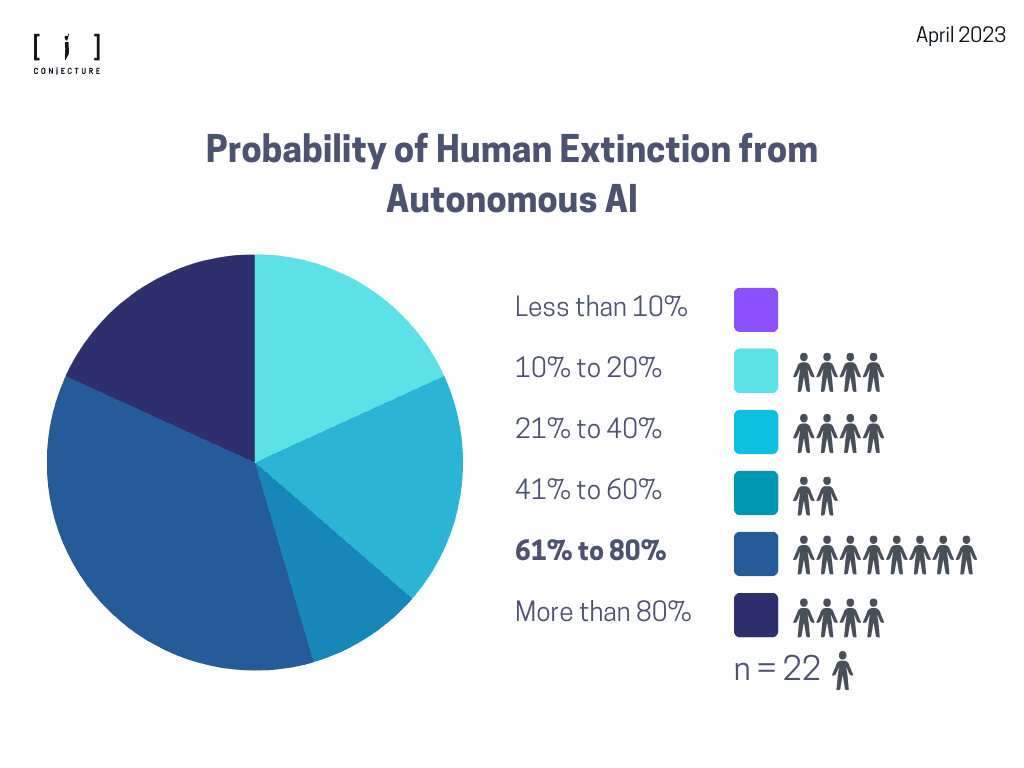

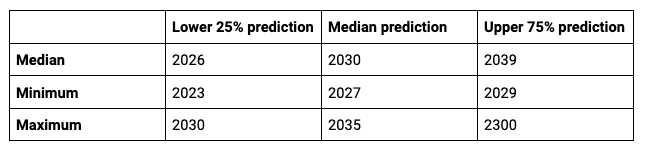

Figure 1. Probability of human extinction from autonomous AI. N = 22. No one reported less than a 10% chance. Most people reported over a 60% chance.

Generally, people at Conjecture estimate the extinction risk from autonomous AI (AI getting out of control) to be quite high. The median estimate is 70% and the mean estimate is 59%. The plurality estimates the risk to be between 60% and 80%. A few people believe extinction risk from AGI is higher than 80%.

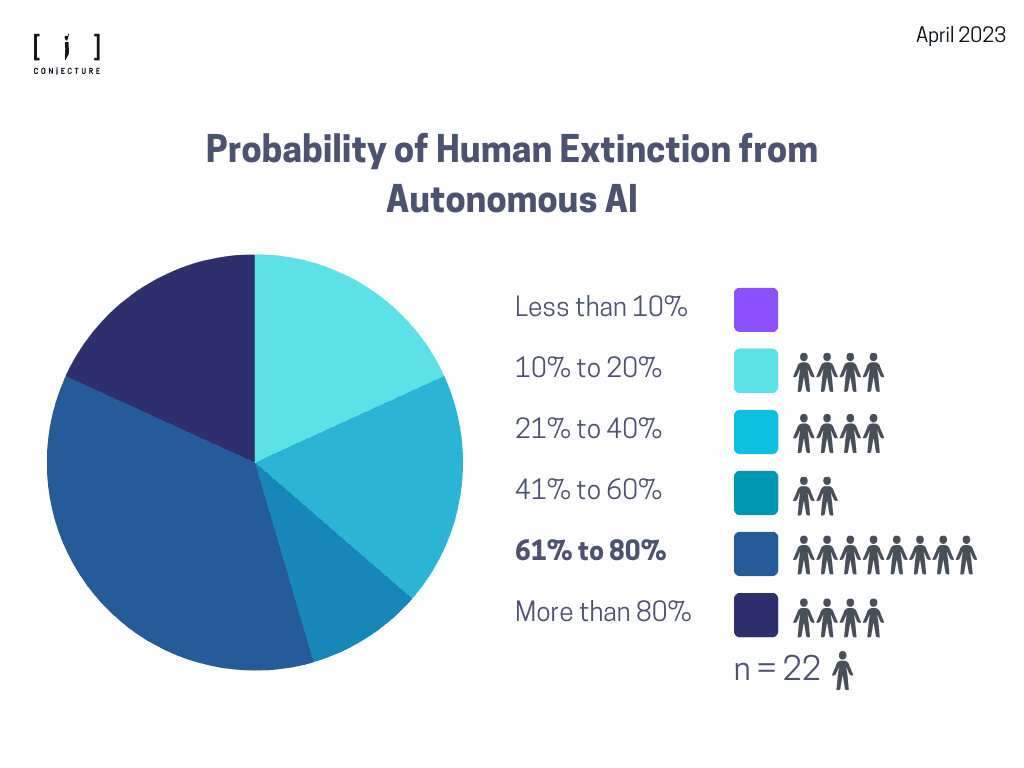

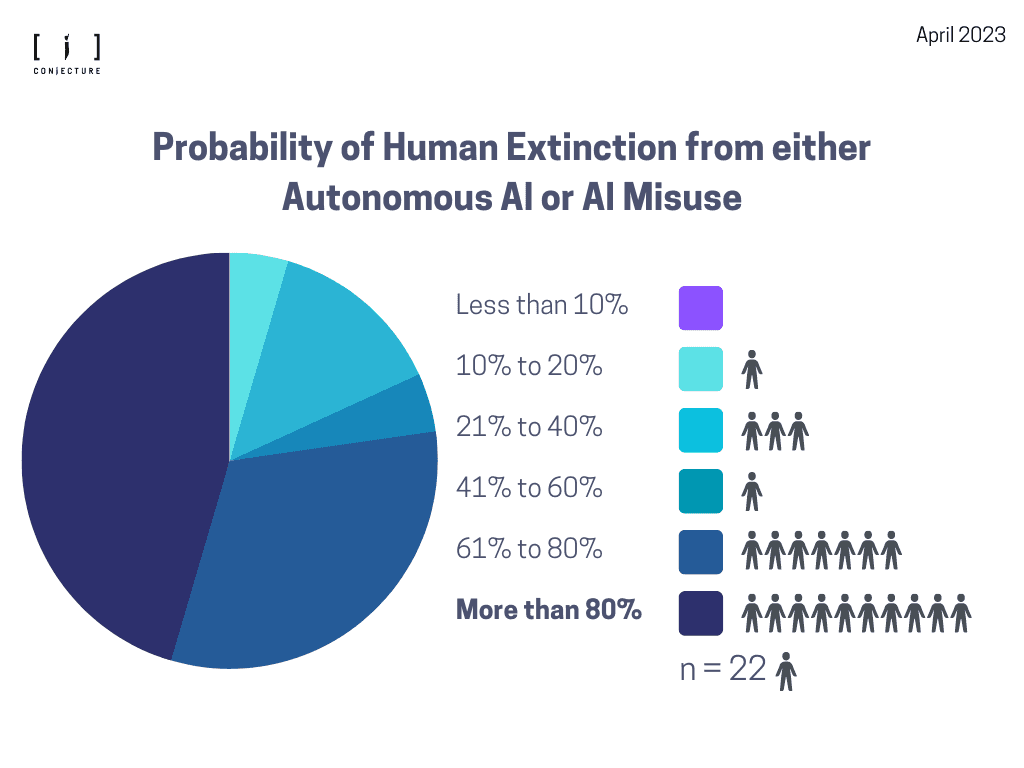

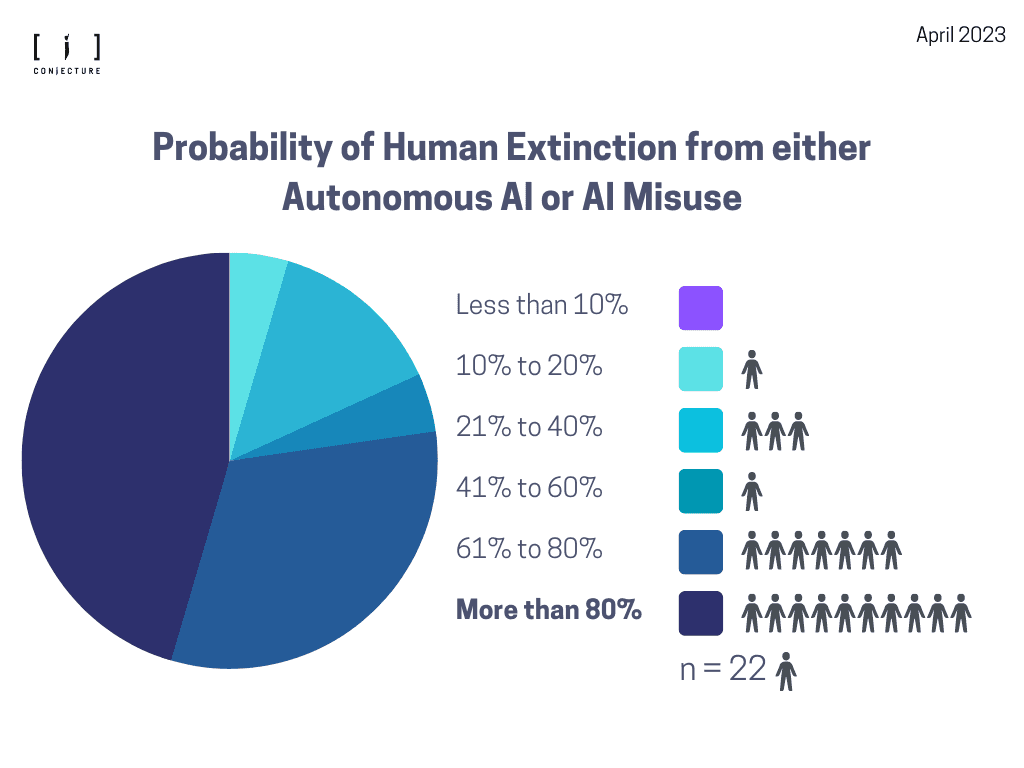

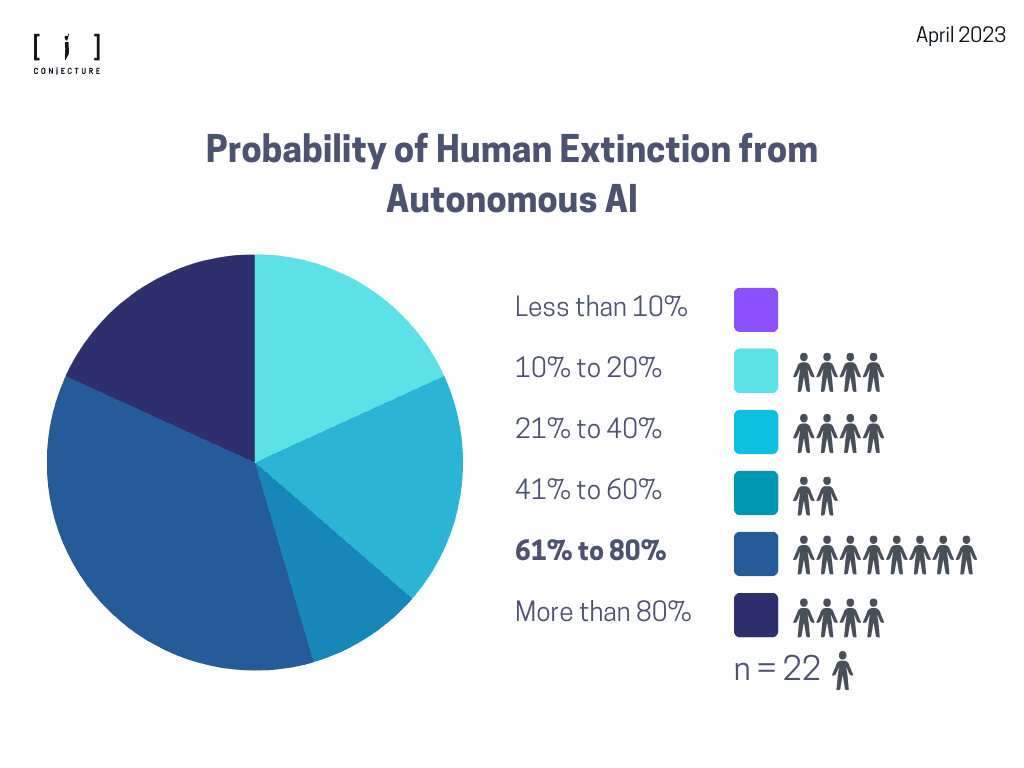

Figure 2. Probability of human extinction from either autonomous AI or AI misuse. N = 22. No one reported less than a 10% chance. Most people reported over a 60% chance. Three people reported a chance of over 90% (not shown directly in the figure).

The second question surveyed extinction risk from AI in general, which includes both misalignment and misuse. The median estimate is 80% and the mean is 71%. The plurality estimates the risk to be over 80%.

Section 2. When will we have AGI?

Setup and limitations

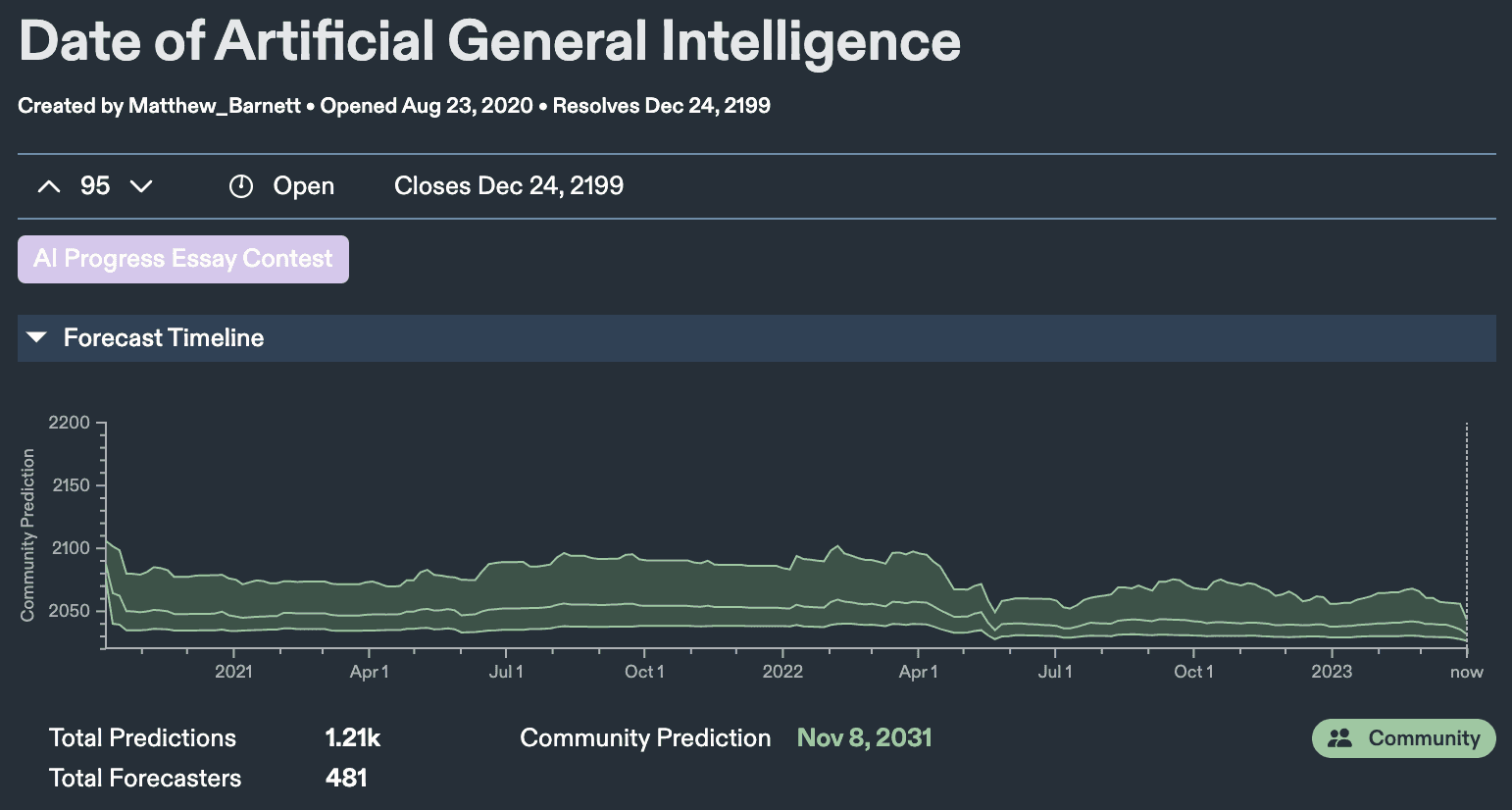

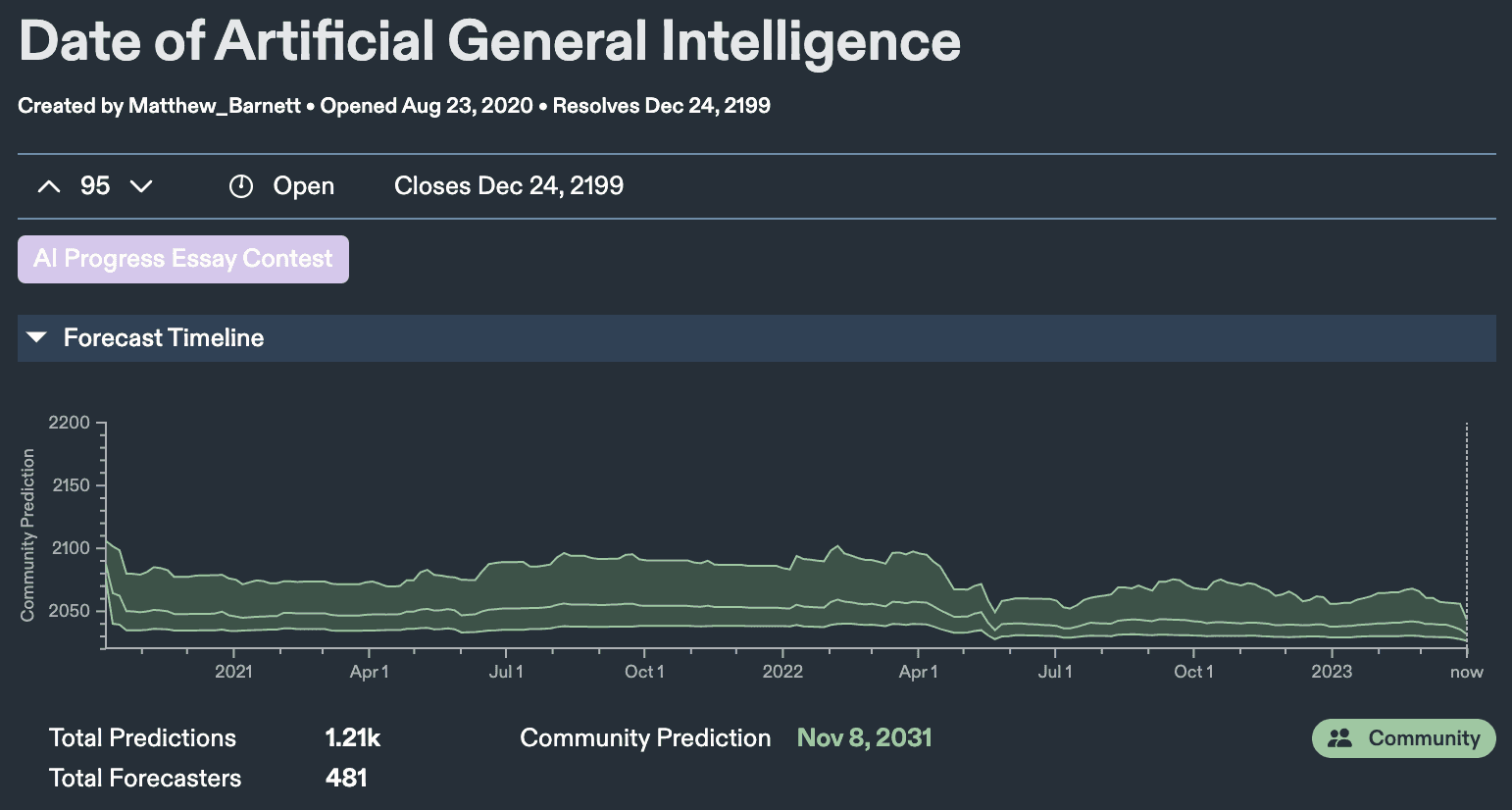

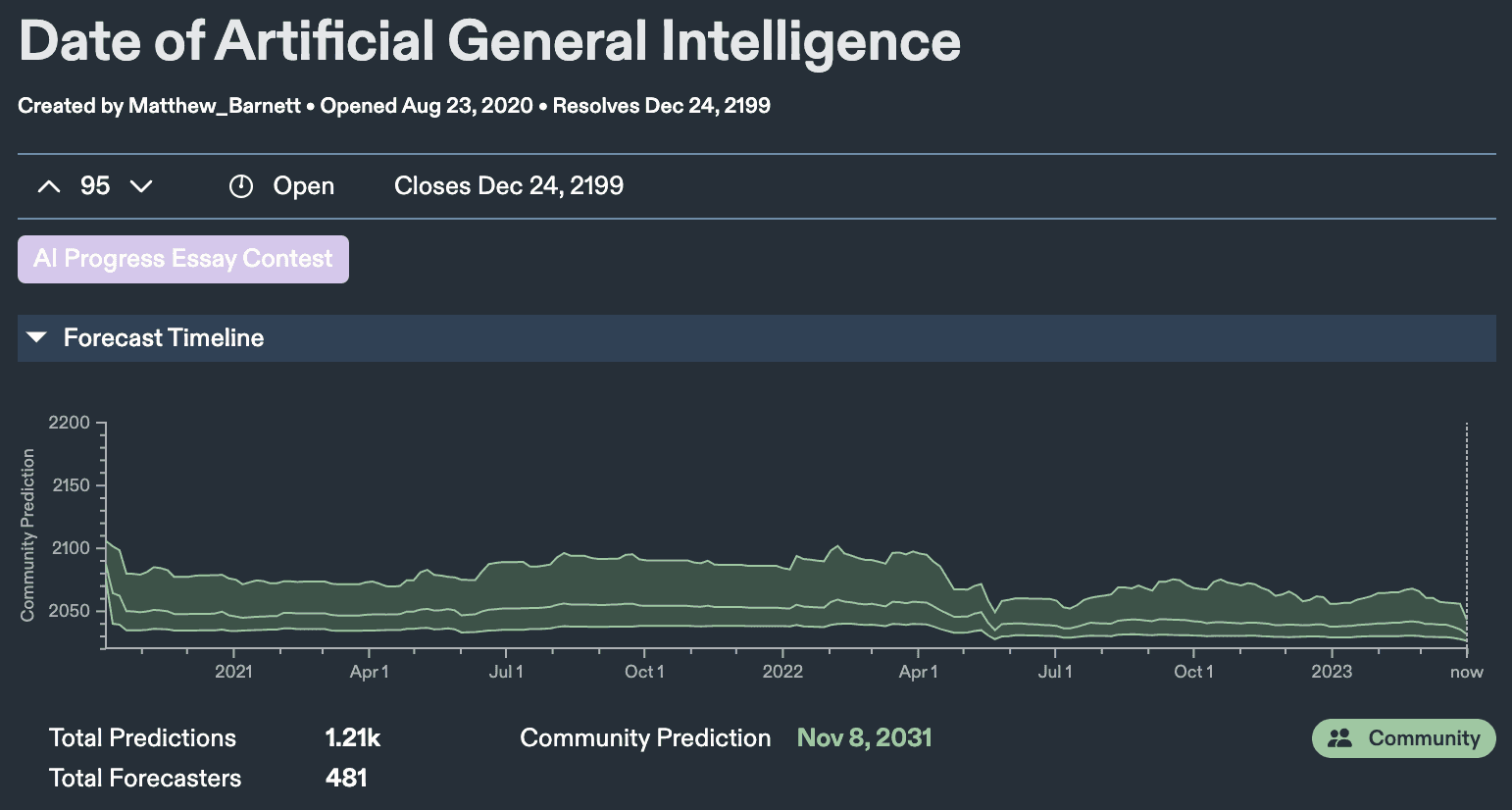

For this question, we asked respondents to predict when AGI will be built, using the specification used on Metaculus. This enables us to compare against the community baseline (Figure 3).

Figure 3. Date of Artificial General Intelligence - screenshot from Metaculus’s specification (April, 2023).

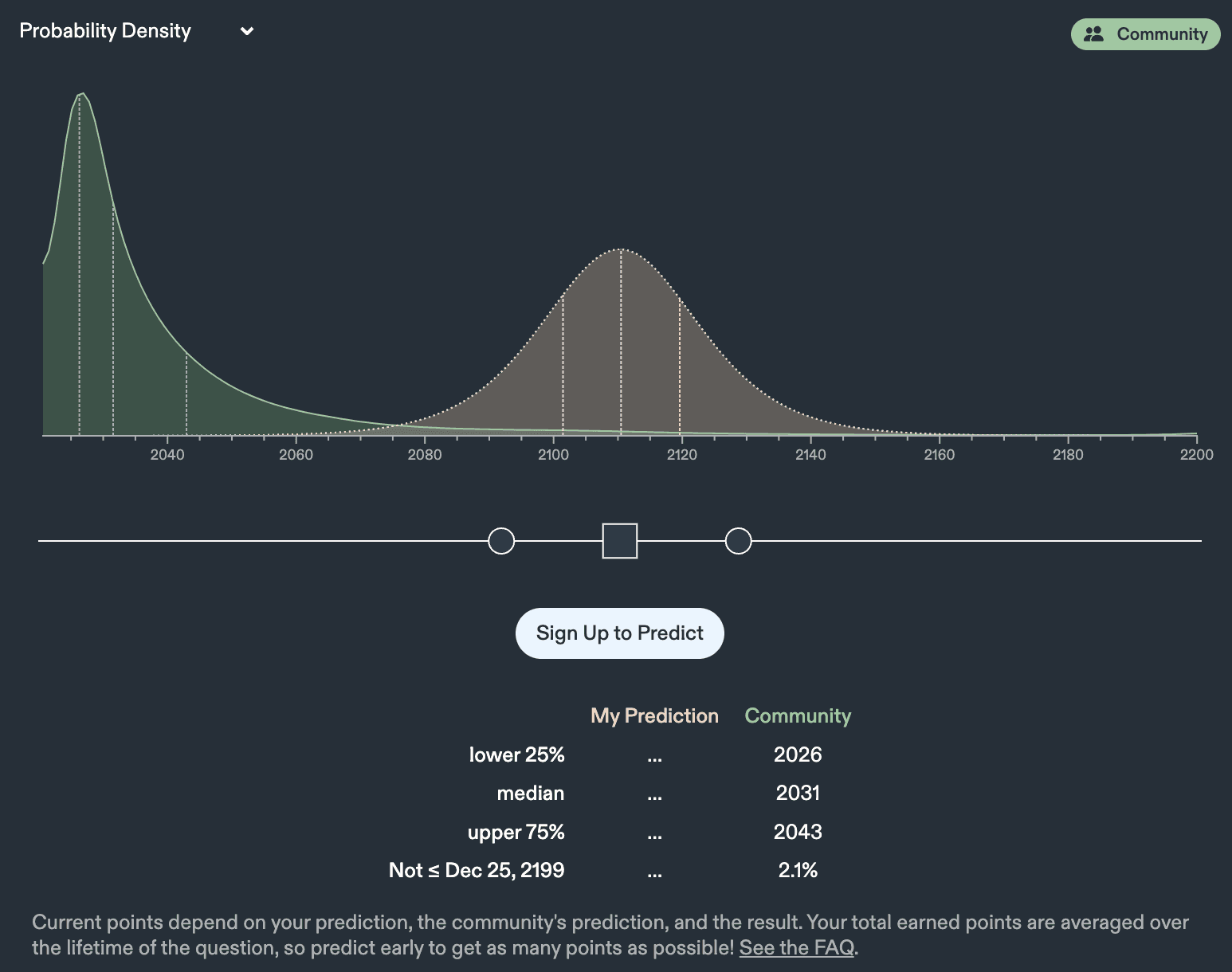

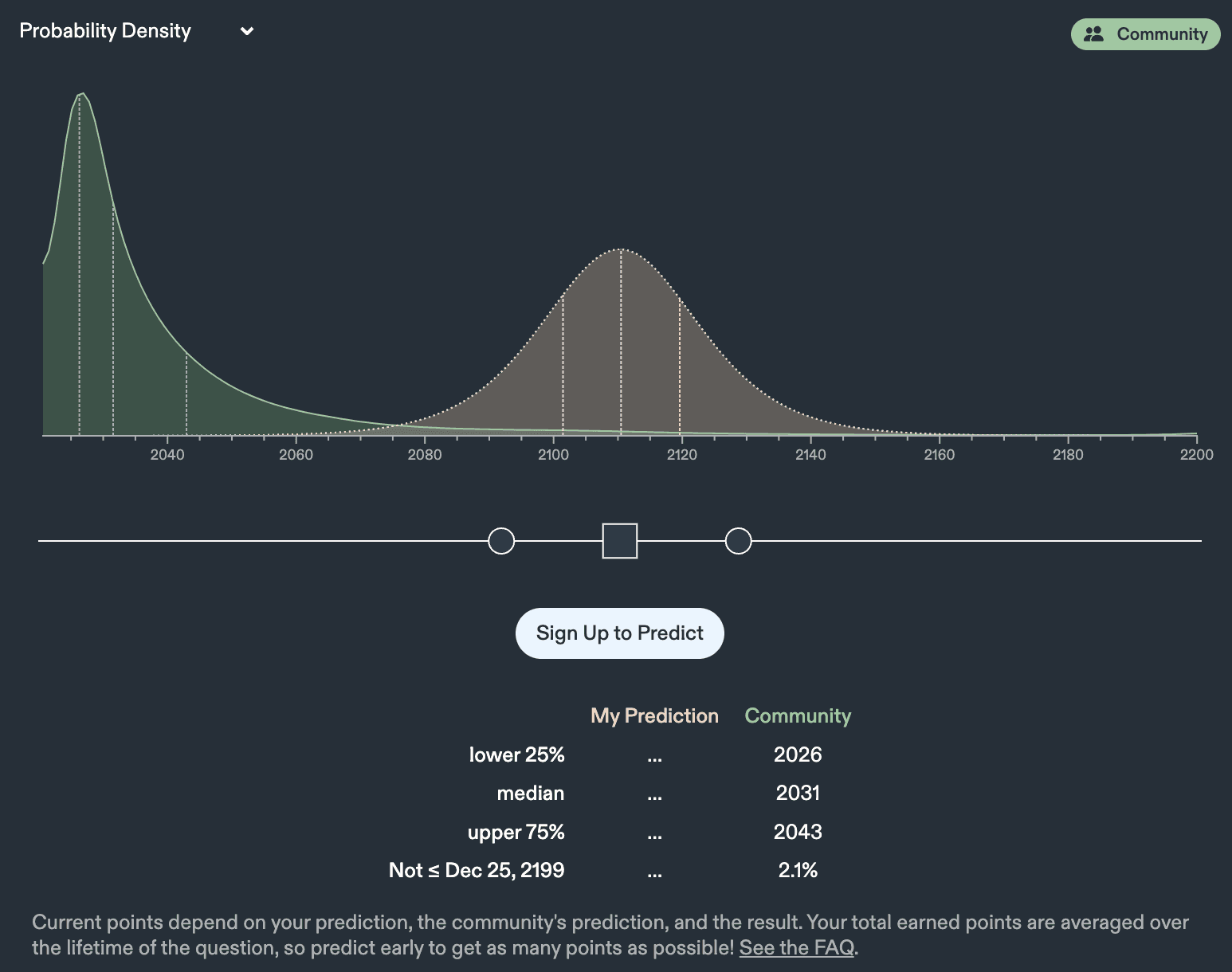

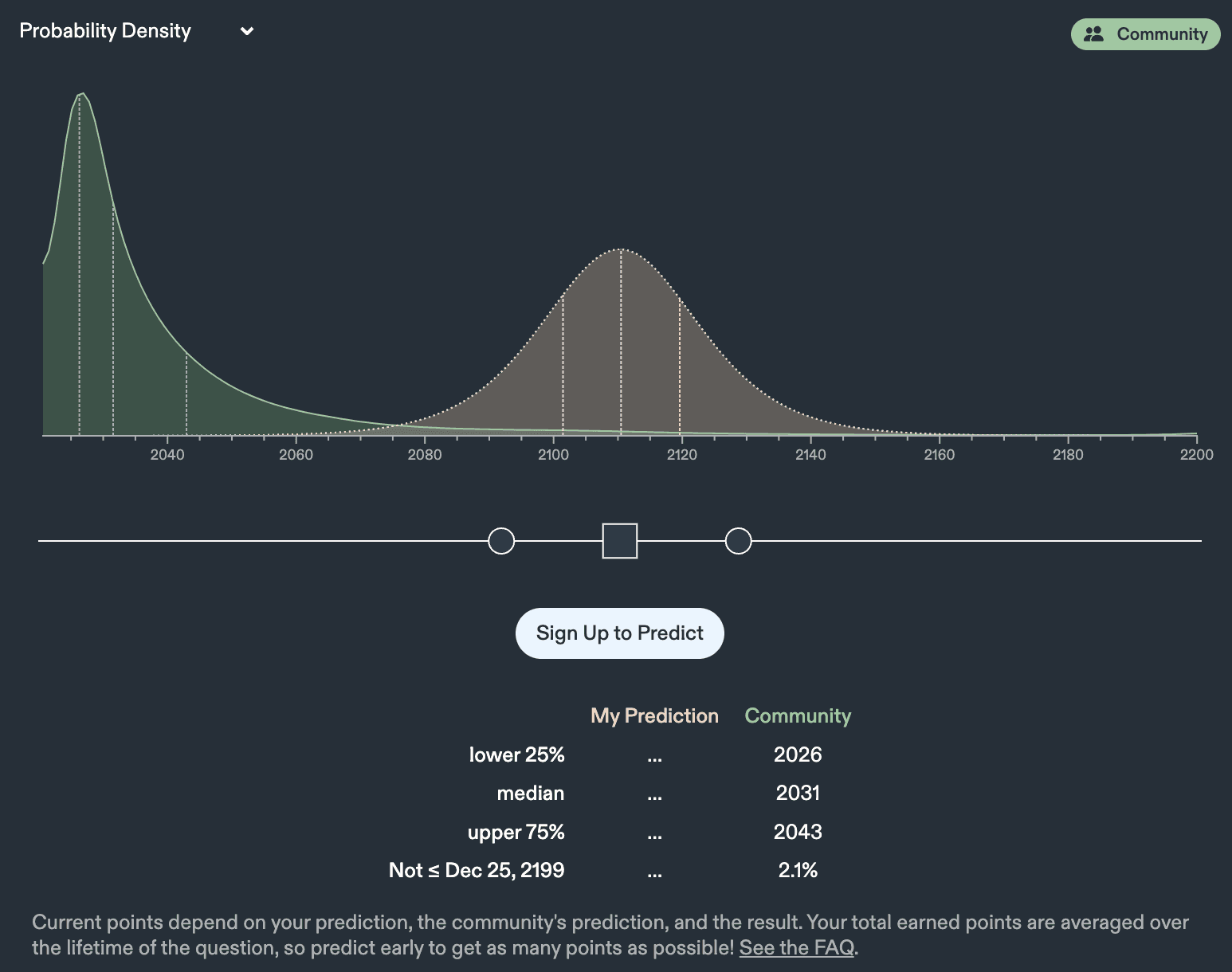

The respondents were instructed to adjust the probability density as seen in Figure 4. This was a deliberate choice, so that respondents could express different levels of confidence towards the earlier or later end of their prediction.

Figure 4. Date of Artificial General Intelligence - screenshot from Metaculus’s specification showing the toggle option people have for making their estimations (April, 2023).

The main caveats of this question were:

The responses are probably anchored to the Metaculus community prediction. At the time of the survey, the community prediction was 2031 (8-year timelines). Conjecture responses centering around a similar prediction should not come as a surprise.

The question allows for a prediction that AGI is already here. It’s unclear whether respondents paid close attention to their lower and upper predictions to ensure that both were sensible. They probably focused on making their median prediction accurate, and might not have noticed how that affected the lower and upper bounds.

Responses

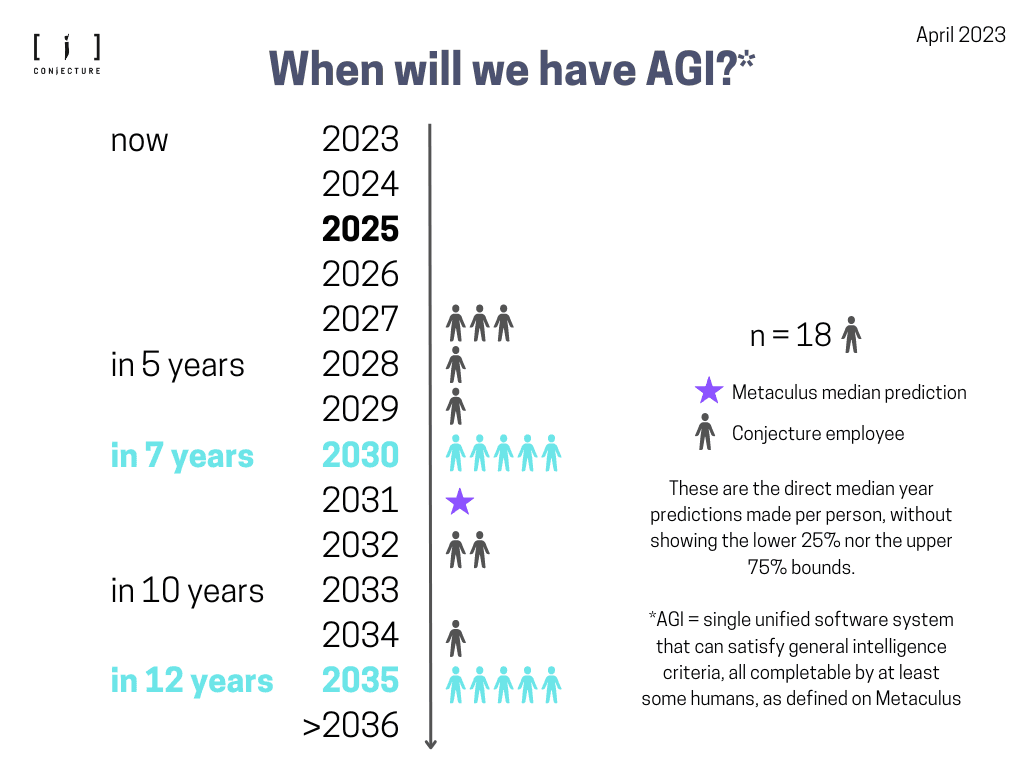

Out of the 23 respondents, five did not answer this question, one of whom rejected the premise. This left 18 responses that were counted in the analysis.

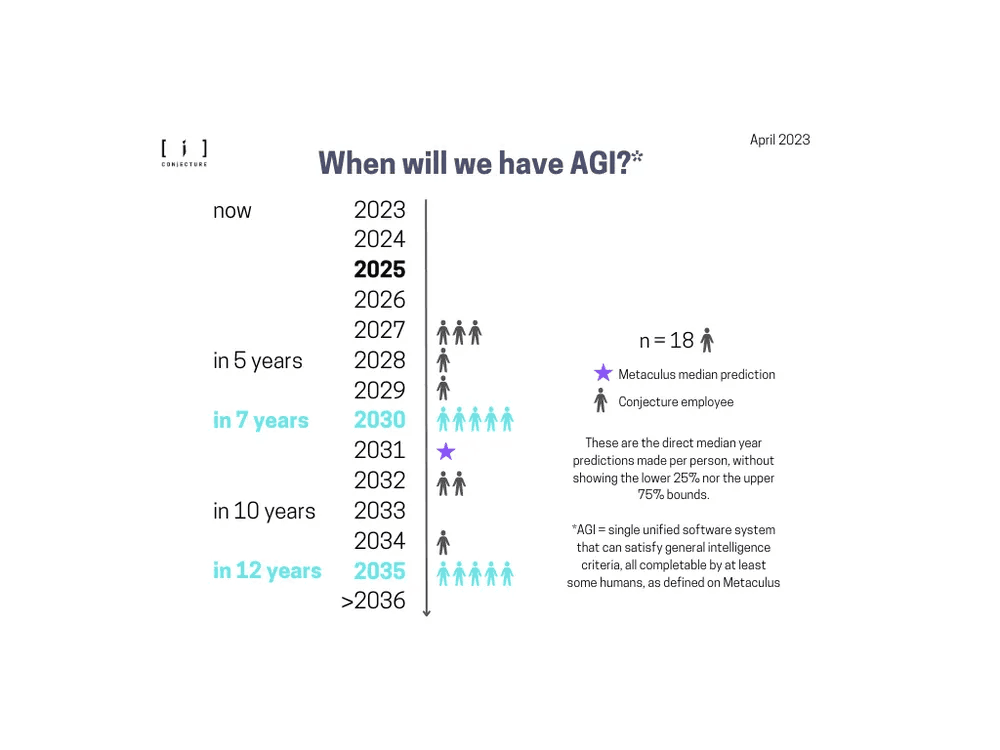

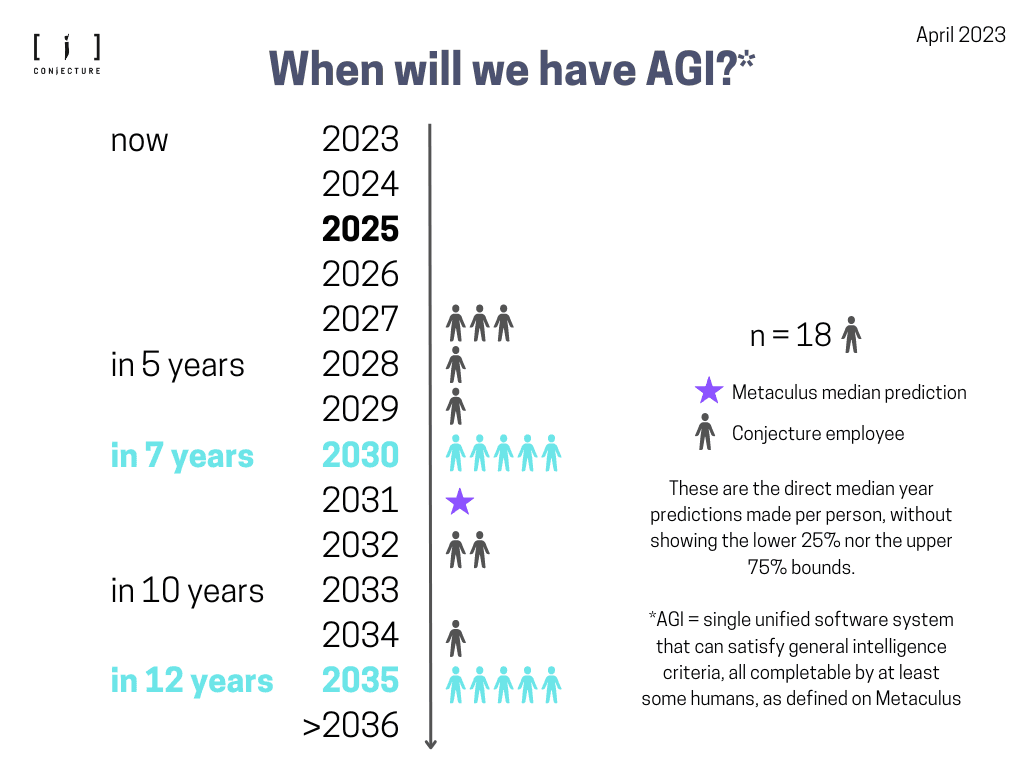

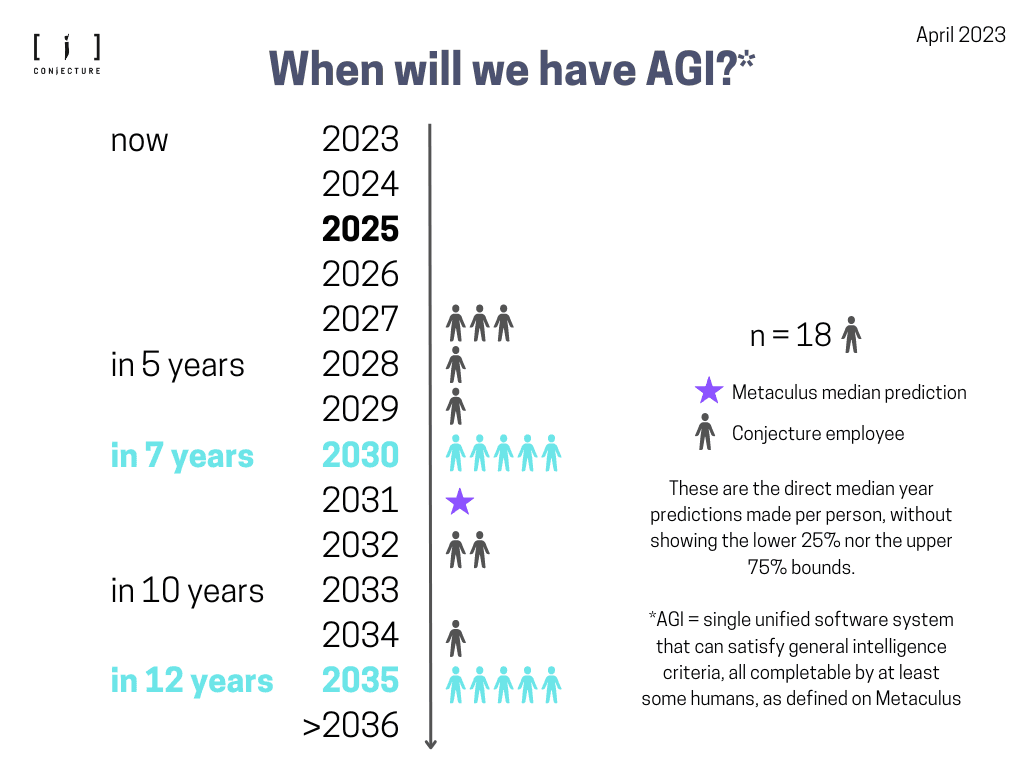

Figure 5. When will we have AGI? (N = 18). The figure shows each respondent’s estimate of the median year by which they expect AGI. Neither the lower 25% bound nor the upper 75% bound is included. The purple star indicates the median prediction made by Metaculus users at the time the survey was conducted. The two most popular responses are the year 2030 (7-year timelines) and the year 2035 (12-year timelines).

Conjecture employees’ timelines are somewhat bimodal (Figure 5). Most people answered either 2030 (7 years until AGI) or 2035 (12 years until AGI). The Metaculus community prediction at the time of the survey was 2031; respondents were likely anchored by this.
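The "years until AGI" figures quoted above are the predicted year minus the survey year (2023). A minimal sketch of that arithmetic follows; the function and constant names are assumptions made for illustration.

```python
SURVEY_YEAR = 2023  # the survey was run in April 2023


def years_until(predicted_year: int, survey_year: int = SURVEY_YEAR) -> int:
    """Convert a predicted AGI year into a timeline length in years."""
    return predicted_year - survey_year


# The two most popular responses and the Metaculus community prediction.
for year in (2030, 2031, 2035):
    print(year, years_until(year))
# 2030 7
# 2031 8
# 2035 12
```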

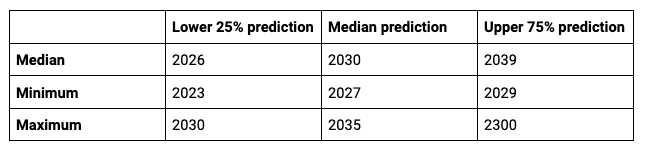

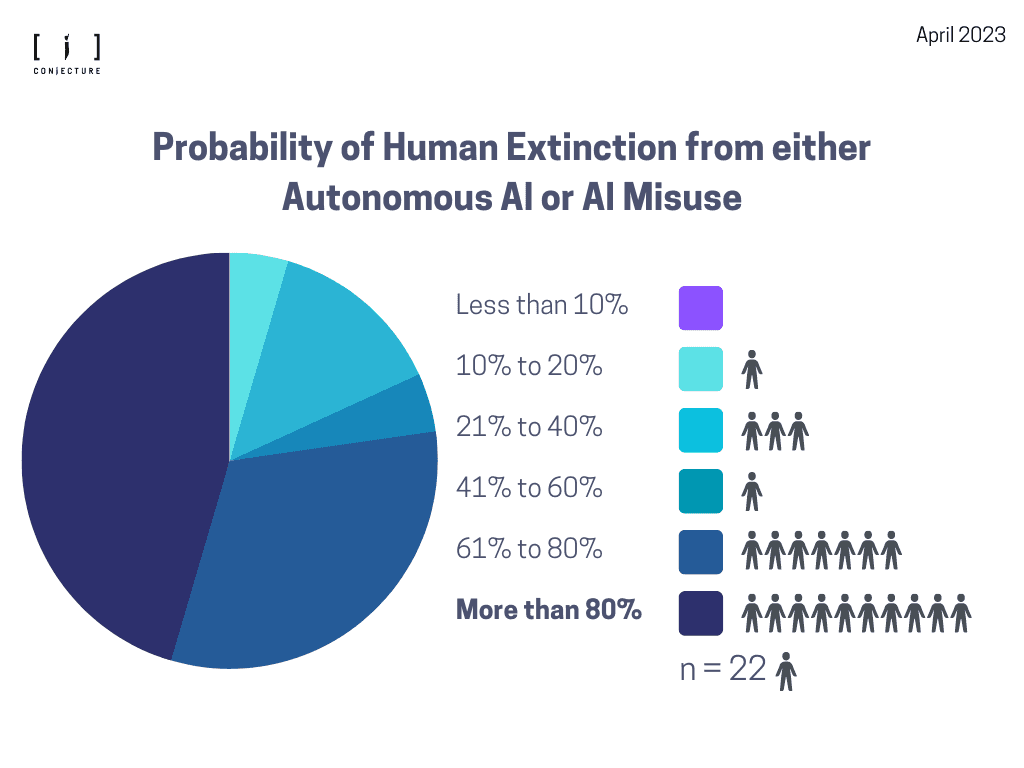

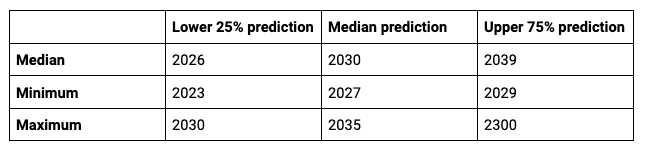

Table 1. Overview of additional statistics for when we will have AGI.

Table 1 shows summary statistics across all respondents, including for the lower and upper bound predictions. Notably, the latest lower-bound (25%) prediction is the year 2030, which is still earlier than the overall median reported by Metaculus users. The median prediction varies from 2027 to 2035. For the upper 75% bound, the median prediction is the year 2039, but it ranges all the way from 2029 to 2300, showing that uncertainty is higher towards the far end of the distribution than for the years closer to 2023.
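Summary statistics of this kind can be computed by taking the minimum, median, and maximum of each bound across respondents. The sketch below shows one way to do that. The data layout, the function name, and the example values are assumptions made for illustration; they are not the survey's data and may not match the exact layout of Table 1.

```python
from statistics import median

# Hypothetical (lower 25%, median, upper 75%) year predictions per respondent.
# Illustrative placeholders only, NOT the survey data.
predictions = [(2025, 2028, 2032), (2027, 2030, 2040), (2029, 2035, 2060)]


def summarize(rows):
    """Return the min, median and max across respondents for each bound."""
    names = ("lower_25", "median", "upper_75")
    summary = {}
    # zip(*rows) turns per-respondent rows into one column per bound.
    for name, column in zip(names, zip(*rows)):
        summary[name] = {
            "min": min(column),
            "median": median(column),
            "max": max(column),
        }
    return summary


for bound, stats in summarize(predictions).items():
    print(bound, stats)
```

A wide gap between the minimum and maximum of the upper bound, compared with the lower bound, is what indicates greater uncertainty at the far end of the distribution.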
